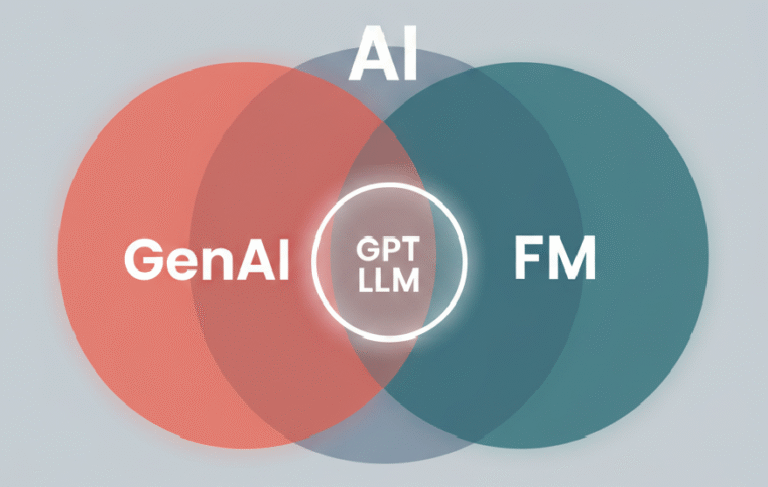

Pharmaceutical and biotechnology sponsors face growing demands to provide evidence faster, more affordably, and more transparently. Systematic reviews, model development, and dossier preparation have long been among the most resource-intensive and slowest-to-scale activities in health economics and outcomes research (HEOR). Generative AI has arrived not as a distant prospect but as a practical workhorse for faster timelines and broader analytic opportunity1-3. While the idea of using artificial intelligence to augment human capability dates to the 1950s4, major strides came in the 1990s3 with the emergence of machine learning, which improved how systems identify patterns and support decision-making. By the early 2000s, deep learning fueled by neural networks had expanded AI's capabilities into complex domains such as image interpretation and natural language understanding3,5.

One of the most notable milestones in AI-driven science came in 2021, when AlphaFold, DeepMind's deep learning system, predicted protein structures with high accuracy, an advance that accelerated drug discovery efforts6,7. The breakthrough was so significant that the scientists behind it shared the 2024 Nobel Prize in Chemistry8.

The development of foundation models (FMs), which are large, adaptable AI systems trained on vast amounts of unlabeled data, has introduced a new generation of tools. Unlike earlier task-specific algorithms, these models are designed to be general-purpose and adaptable across multiple domains9. The release of ChatGPT in late 2022 brought widespread attention to this shift, demonstrating how large language models (LLMs), a key type of FM, can generate relevant and coherent content from simple prompts10.

In healthcare, these advances are opening new doors, particularly in evidence synthesis, health economic modeling, literature reviews, and the development of value communication materials. Health technology assessment (HTA) agencies are beginning to respond to this shift. The UK's National Institute for Health and Care Excellence (NICE), for instance, has already outlined its preliminary approach to AI use in health technology assessments, including how generative AI may be incorporated into formal submissions11.

This article aims to support HEOR professionals as they adapt to the growing role of AI in their work. It breaks down the core concepts behind AI, machine learning, generative AI, and foundation models, highlights practical applications in HEOR, and shares best practices for using these technologies effectively.

Generative AI is no longer just a theoretical concept in HEOR; it is starting to show real value in day-to-day work. Its applications are growing fast, from literature reviews and evidence synthesis to economic modeling and value communication. While the field is still evolving, early use cases already show how these tools can streamline manual tasks and speed up workflows. Based on both recent experience and a review of current studies, the following examples highlight where generative AI is making a meaningful difference and where it is still important to proceed with caution.

One of the earliest and most practical applications of foundation models (FMs) has been in systematic literature reviews (SLRs), a cornerstone of evidence generation in HEOR3. These reviews often involve time-intensive workflows: screening abstracts, assessing bias, extracting data, and in some cases, performing meta-analyses across large volumes of studies.

FMs are increasingly being tested across these steps. They can assist in crafting structured search strategies and applying predefined inclusion or exclusion criteria. Some have shown high accuracy in screening and classifying research articles, as seen in recent evaluations of GPT-4 for medical SLRs following PRISMA guidelines12.

Beyond screening, generative AI models can explain why studies are excluded, help with bias assessments using structured frameworks, and extract structured information like population, intervention, comparator, and outcome (PICO) elements from unstructured text. They are also capable of writing code (in R, Python, etc.) to automate meta-analyses and generate concise, comparative study summaries.
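
As an illustration of the kind of meta-analysis script such a model might be asked to draft, the sketch below pools study-level effect estimates (here, log hazard ratios) using fixed-effect inverse-variance weighting. The trial values are hypothetical, and a real analysis would also assess heterogeneity and consider a random-effects model; the point is only to show what "code to automate meta-analyses" looks like at its simplest.

```python
import math

def fixed_effect_pool(estimates, std_errors):
    """Pool effect estimates on an additive scale by inverse-variance weighting.

    Returns the pooled estimate, its standard error, and a 95% confidence interval.
    """
    if not estimates or len(estimates) != len(std_errors):
        raise ValueError("need one standard error per estimate")
    weights = [1.0 / se ** 2 for se in std_errors]  # w_i = 1 / SE_i^2
    total_w = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, estimates)) / total_w
    pooled_se = math.sqrt(1.0 / total_w)
    z = 1.959963984540054  # two-sided 95% standard normal quantile
    return pooled, pooled_se, (pooled - z * pooled_se, pooled + z * pooled_se)


# Hypothetical log hazard ratios and standard errors from three trials
log_hr = [-0.20, -0.35, -0.10]
se = [0.10, 0.15, 0.20]
est, est_se, (lo, hi) = fixed_effect_pool(log_hr, se)
print(f"Pooled HR = {math.exp(est):.3f} (95% CI {math.exp(lo):.3f} to {math.exp(hi):.3f})")
```

Working on the log scale keeps the pooling additive; results are exponentiated back to hazard ratios only for reporting.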

In network meta-analyses (NMAs), a common approach in HEOR to compare multiple treatment options, FMs show promise in extracting relevant variables, harmonizing input data, and creating interpretable outputs. In one instance, GPT-4 demonstrated over 99% accuracy replicating extraction from multiple NMA datasets13.
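
The simplest building block of an NMA is the adjusted indirect comparison (often attributed to Bucher and colleagues): two treatments that were each trialled against a common comparator C are compared through C. A minimal sketch, using hypothetical log odds ratios, follows. Full NMAs extend this idea to entire networks of trials, usually with dedicated Bayesian or frequentist software.

```python
import math
from typing import NamedTuple

class Contrast(NamedTuple):
    """A relative effect on an additive scale (e.g. log odds ratio) and its standard error."""
    estimate: float
    se: float

def indirect_comparison(a_vs_c: Contrast, b_vs_c: Contrast) -> Contrast:
    """Adjusted indirect comparison of A vs B through the common comparator C.

    d_AB = d_AC - d_BC; the variances add because the two trials are independent.
    """
    return Contrast(
        estimate=a_vs_c.estimate - b_vs_c.estimate,
        se=math.sqrt(a_vs_c.se ** 2 + b_vs_c.se ** 2),
    )

# Hypothetical inputs: log odds ratios of A vs placebo and of B vs placebo
ab = indirect_comparison(Contrast(-0.50, 0.12), Contrast(-0.30, 0.16))
print(f"log OR, A vs B = {ab.estimate:.2f} (SE {ab.se:.2f})")
```

Because the standard errors combine, an indirect estimate is always less precise than either of the direct comparisons that feed it.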

While these applications are encouraging, limitations remain. AI hallucinations (plausible-sounding but fabricated outputs), inconsistent classification, and errors in data extraction continue to require human oversight. Until standardized benchmarks for evaluating AI-generated outputs are established, expert validation will remain critical to ensuring scientific rigor.
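
One lightweight safeguard, sketched below under our own assumptions, is to require every value a model extracts to appear verbatim in the source text and to route anything that does not to a human reviewer. The PICO field names, the JSON reply format, and the example abstract are hypothetical. A verbatim-match rule is deliberately strict and will also flag correct paraphrases, so it narrows expert review rather than replacing it.

```python
import json

PICO_FIELDS = ("population", "intervention", "comparator", "outcome")  # hypothetical schema

def unsupported_fields(abstract: str, model_output: str) -> list:
    """Return PICO fields that are missing or not found verbatim in the abstract."""
    record = json.loads(model_output)  # assumes the model was asked to reply in JSON
    text = " ".join(abstract.lower().split())  # normalize case and whitespace
    flagged = []
    for field in PICO_FIELDS:
        value = record.get(field)
        if not isinstance(value, str) or " ".join(value.lower().split()) not in text:
            flagged.append(field)
    return flagged

abstract = ("Adults with type 2 diabetes were randomized to drug X or placebo; "
            "the primary outcome was change in HbA1c at 26 weeks.")
output = json.dumps({
    "population": "adults with type 2 diabetes",
    "intervention": "drug X",
    "comparator": "placebo",
    "outcome": "change in body weight",  # not stated in the abstract: should be flagged
})
print(unsupported_fields(abstract, output))
```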

Generative AI is also beginning to influence health economic modeling, offering new ways to conceptualize, replicate, and adapt models for diverse decision-making contexts. Foundation models can summarize existing economic evaluations, identify and extract relevant parameters, and reduce time spent sourcing data inputs.

In some studies, these tools have reconstructed widely used models, for example multi-state partitioned survival models in oncology and cost-effectiveness analyses in infectious disease. These replications included structural mapping, parameter retrieval, script generation, and preliminary validation, all driven by AI from publicly available literature.
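
To make the model type concrete: a partitioned survival model allocates a cohort across health states directly from survival curves. Progression-free patients come from the progression-free survival (PFS) curve, progressed patients are the gap between overall survival (OS) and PFS, and the remainder have died. The sketch below assumes exponential curves, annual cycles, and cost, utility, and discount values that are all hypothetical, and it omits refinements such as half-cycle correction.

```python
import math

def exp_survival(rate: float, t: float) -> float:
    """Exponential survival function S(t) = exp(-rate * t), with t in years."""
    return math.exp(-rate * t)

def partitioned_survival(pfs_rate, os_rate, cycles, costs, utilities, discount=0.035):
    """Three-state partitioned survival model (progression-free, progressed, dead).

    Occupancy is read off the curves at the end of each annual cycle t:
    PF = S_pfs(t), PD = S_os(t) - S_pfs(t), dead = 1 - S_os(t).
    Returns discounted expected costs and QALYs per patient (no half-cycle correction).
    """
    total_cost = total_qaly = 0.0
    for t in range(1, cycles + 1):
        os_t = exp_survival(os_rate, t)
        pf = min(exp_survival(pfs_rate, t), os_t)  # guard: PFS cannot exceed OS
        pd = os_t - pf
        disc = 1.0 / (1.0 + discount) ** t
        total_cost += disc * (pf * costs["pf"] + pd * costs["pd"])
        total_qaly += disc * (pf * utilities["pf"] + pd * utilities["pd"])
    return total_cost, total_qaly

# Hypothetical inputs: annual hazards, annual state costs, and state utilities
cost, qaly = partitioned_survival(
    pfs_rate=0.5, os_rate=0.25, cycles=20,
    costs={"pf": 30_000, "pd": 15_000}, utilities={"pf": 0.8, "pd": 0.6},
)
print(f"Discounted cost per patient: {cost:,.0f}; QALYs: {qaly:.2f}")
```

Because the states are derived from separately fitted curves rather than from transitions, the structure is easy to replicate from published PFS and OS data, which may be one reason it has been a natural early target for AI-driven reconstruction.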

Although most current examples involve relatively simple models, the potential applications are far broader. Future use cases might include validating economic models by comparing them with real-world data, adapting models to specific geographies or patient populations, and even automating transitions between platforms (like Excel to R Shiny).

Generative AI could also lighten the burden of tasks like structural uncertainty analysis, an area known for its complexity and resource intensity, by streamlining parts of the workflow and enabling faster iterations.

Even with all these advances, human judgment remains crucial. In economic modeling, especially for submissions to HTA agencies, transparency, reproducibility, and accuracy are non-negotiable. Until stronger evaluation frameworks are in place, generative AI should be viewed as a powerful support tool, not a substitute for the expertise of health economists.

Generative AI and FMs are becoming valuable tools for generating real-world evidence (RWE), especially by helping teams work with real-world data faster, at larger scale, and with greater precision. A major challenge, though, is that much of the data in electronic health records (EHRs) is unstructured, hidden in physician notes, discharge summaries, or diagnostic reports, which makes it hard to use for research without extra processing.

Structured EHR data are relatively easy to analyze, but the deeper clinical insights are often buried in this free text. Natural language processing (NLP) tools have made progress on the problem, and generative AI takes things further: it can pull meaningful information from unstructured records and convert it into formats that are easier to work with, reducing manual effort and the risk of human error.

For example, FMs have been shown to accurately pull biomarker testing information from clinical documents. That said, performance is not always consistent. In one study, generative models failed to reliably map descriptive language to medical coding systems, showing an accuracy rate below 50%14. However, those models had not undergone fine-tuning, something that has been shown to substantially improve performance.

To improve reliability, researchers are turning to domain-specific models like GatorTron, NYUTron, and Me LLaMA, which are trained on large datasets of clinical text14. Prompt engineering, that is, carefully framing the questions and tasks given to generative models like GPT-3.5 and GPT-4, also plays a role in improving outcomes.

Another promising area is the integration of multimodal data. By combining structured EHRs with imaging data, genomic information, and clinical text, generative AI can provide deeper and more nuanced insights. One notable example is AI systems that accurately forecast COVID-19 hospitalizations using real-time textual data and genomic surveillance, something traditional forecasting tools could not do.

While there are still challenges with data accuracy and consistency, ongoing advances in specialized models and multimodal techniques are steadily pushing the field toward more reliable, high-quality RWE datasets. This evolution is essential for informing value-based assessments of healthcare interventions more comprehensively.

At the same time, generative AI is beginning to make a real impact by simplifying the preparation of value dossiers and other reporting documents for pharmaceutical submissions. These tools are especially helpful for writing tasks that require a specific format, matching the tone of existing documents, and summarizing key findings clearly.

By automating evidence collation and document drafting, generative models can save teams significant time and effort during dossier development. Their language flexibility also makes them suitable for creating region-specific submissions, supporting global regulatory and HTA engagement.

Generative AI is also being explored for its ability to tailor communication by creating content for different stakeholder audiences. From technical documentation to patient-friendly summaries, these tools are proving useful in personalizing messages and simplifying complex data.

However, caution is essential. Foundation models can sometimes generate misleading or factually incorrect content, especially when dealing with detailed scientific material. These inaccuracies, if unchecked, can pose serious credibility risks for regulatory submissions. As such, thorough human review and strong validation processes remain non-negotiable.

Generative AI has a lot of potential in HEOR, but many of its applications are still in the early stages. Among the different use cases, systematic literature reviews seem to be the most advanced so far. Other areas like economic modeling and automating value dossiers are showing progress, but they still need more testing to prove they can deliver reliable, consistent results.

One of the biggest challenges with generative AI is judging the quality of its outputs. Results can vary widely depending on how someone uses the model; stronger prompts usually lead to better results. That means the user's skill and experience play a big role, which raises an important question: how much of the output is really the model's doing, and how much comes from the person guiding it?

Rather than trying to separate these influences, it may be more practical to develop objective performance metrics for evaluating generative AI outputs regardless of who is operating them. Several such frameworks are already in development and may become valuable for both users and reviewers in assessing content generated for regulatory or scientific use.
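
As a simple illustration of an operator-independent metric, the sketch below scores a model's screening decisions against a human reference standard using sensitivity (recall), specificity, and precision. The decision lists are hypothetical. In screening, sensitivity is usually weighted most heavily, because a missed eligible study is costlier than an extra full-text review.

```python
def screening_metrics(model, reference):
    """Compare include/exclude decisions (True = include) with a human reference standard."""
    if len(model) != len(reference):
        raise ValueError("decision lists must be the same length")
    pairs = list(zip(model, reference))
    tp = sum(m and r for m, r in pairs)          # both include
    fp = sum(m and not r for m, r in pairs)      # model includes, reviewers exclude
    fn = sum(r and not m for m, r in pairs)      # model misses an eligible study
    tn = sum(not m and not r for m, r in pairs)  # both exclude

    def ratio(num, den):
        return num / den if den else float("nan")

    return {
        "sensitivity": ratio(tp, tp + fn),
        "specificity": ratio(tn, tn + fp),
        "precision": ratio(tp, tp + fp),
    }

model_says = [True, True, False, True, False, False, True, True]
humans_say = [True, False, False, True, True, False, True, False]
print(screening_metrics(model_says, humans_say))
```

Because the metrics depend only on the decisions and the reference standard, they can be computed the same way whoever wrote the prompt.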

As generative AI tools become more integrated into HEOR, it is important to focus on how we can actually make these tools work better in real-world scenarios. This section looks at hands-on strategies like prompt engineering, retrieval-augmented generation (RAG), fine-tuning models, and using domain-specific foundation models. These techniques are playing a growing role in helping AI deliver more accurate, relevant, and trustworthy results within HEOR.

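Prompt engineering is essentially the art of asking the right questions in the right way. By carefully crafting how inputs are phrased, users can guide large language models (LLMs) to generate clearer, more targeted responses. Commonly used strategies include zero-shot and few-shot prompting (giving the model no examples or a handful of worked examples), chain-of-thought prompting (asking it to reason step by step), and persona-based prompting (asking it to answer in the role of a specific expert)15.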
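
The sketch below assembles a few-shot screening prompt: an optional persona line, the inclusion criteria, a handful of labeled examples, and the abstract to classify. With no examples it reduces to a zero-shot prompt. The criteria, examples, and wording are hypothetical, and the function only builds the text; sending it to a model is left out because the client library varies by provider.

```python
def build_screening_prompt(criteria, examples, abstract, persona=None):
    """Assemble a few-shot prompt for title/abstract screening.

    criteria: list of inclusion criteria; examples: list of (abstract, answer) pairs.
    An empty examples list yields a zero-shot prompt.
    """
    lines = []
    if persona:
        lines.append(persona)  # optional persona-based framing
    lines.append("Decide whether the abstract meets ALL inclusion criteria.")
    lines += [f"- {c}" for c in criteria]
    lines.append("Answer INCLUDE or EXCLUDE, then give a one-sentence reason.")
    for text, answer in examples:  # worked examples shown before the real task
        lines += ["", f"Abstract: {text}", f"Answer: {answer}"]
    lines += ["", f"Abstract: {abstract}", "Answer:"]
    return "\n".join(lines)

prompt = build_screening_prompt(
    criteria=["Randomized controlled trial", "Adults with type 2 diabetes"],
    examples=[("A cohort study of insulin use in adolescents.",
               "EXCLUDE. Not a randomized trial, and the population is not adults.")],
    abstract="A randomized trial of drug X versus placebo in adults with type 2 diabetes.",
    persona="You are an experienced systematic reviewer.",
)
print(prompt)
```

Asking for a one-sentence reason also produces the kind of exclusion rationale described earlier, which makes spot-checking by reviewers faster.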

These techniques are already being used in real-world HEOR applications like systematic literature reviews and economic modeling. Still, each has its limitations. For example, zero- or few-shot prompts can miss subtle context, while chain-of-thought can become overly wordy. Persona-based prompts require a strong grasp of how different experts communicate, which can be tough to replicate consistently.

Fine-tuning involves retraining an existing model on a focused dataset to make it better at a specific task. For example, a general model might be fine-tuned using question–answer pairs or task-specific instructions to perform better in medical literature reviews or health economic evaluations.

One form of this is instruction tuning, where models learn from curated question–answer examples. Another is reinforcement learning from human feedback (RLHF)16, where human reviewers help improve output quality over time. There are also self-improving loops, where the model adapts its responses based on feedback from previous answers.

In HEOR, a good example of fine-tuning in action is Bio-SIEVE, a model designed to help screen studies for systematic reviews. It was built using instruction-tuned versions of Llama and Guanaco and can determine whether a study should be included based on set criteria.
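
Instruction-tuning data for a screening model are commonly stored as instruction, input, and output records, one JSON object per line (JSONL). The sketch below converts labeled screening decisions into that shape. The field names follow a widespread convention but are an assumption here, not necessarily the format used to train Bio-SIEVE, and the example abstracts are hypothetical.

```python
import json

def to_instruction_records(criteria, labeled_abstracts):
    """Turn (abstract, included) pairs into instruction-tuning records.

    The "instruction" / "input" / "output" field names are an assumed convention.
    """
    instruction = ("Given the inclusion criteria below, decide whether the study "
                   "should be included.\nCriteria:\n" + "\n".join(f"- {c}" for c in criteria))
    return [
        {"instruction": instruction,
         "input": abstract,
         "output": "include" if included else "exclude"}
        for abstract, included in labeled_abstracts
    ]

def to_jsonl(records):
    """Serialize records as JSONL: one JSON object per line."""
    return "".join(json.dumps(rec, ensure_ascii=False) + "\n" for rec in records)

records = to_instruction_records(
    ["Randomized controlled trial", "Adults"],
    [("RCT of drug X in 400 adults.", True), ("Case report of a child.", False)],
)
print(to_jsonl(records))
```

Keeping the criteria inside each record lets one model learn to apply different criteria sets, rather than memorizing a single review's rules.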

While fine-tuning can dramatically improve performance, it is not always easy. It often demands serious computing power, expert oversight, and careful planning to avoid overfitting the model to one narrow task, making it less useful in other areas.

Unlike general-purpose models, domain-specific FMs are trained on data from one area like healthcare or biomedical research. This helps the model develop a deeper understanding of the terminology, context, and types of questions common in that field.

Examples include GatorTron, NYUTron, and Me LLaMA, which are trained on large datasets of clinical text14.

These models often outperform general ones on tasks like identifying medical terms or summarizing clinical texts. But there is a trade-off: the cost and complexity of training such models can be too high for smaller teams or institutions. Some models, like GatorTron, are open access under certain licenses, helping reduce this barrier somewhat.

RAG is a method that allows generative AI tools to combine their broad, pre-trained knowledge with real-time or domain-specific data. Instead of relying only on what the model already “knows,” it can pull in external information to produce more accurate and up-to-date results.

This is especially useful in fast-evolving fields like healthcare, where relying on older, static training data can limit accuracy. For example, a model using RAG might verify claims against external databases or live web sources before generating a response.
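
The retrieval step can be sketched in a few lines. The example below ranks a small in-memory document store by bag-of-words cosine similarity and prepends the top passages to the question, instructing the model to answer only from them and to cite their ids. Production systems typically use learned embeddings and a vector index instead, and the documents here are hypothetical placeholders for an external knowledge base.

```python
import math
import re
from collections import Counter

def _vector(text):
    """Bag-of-words term counts over lowercased alphanumeric tokens."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def _cosine(a, b):
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(question, documents, k=2):
    """Return the ids of the k documents most similar to the question."""
    q = _vector(question)
    ranked = sorted(documents, key=lambda d: _cosine(q, _vector(documents[d])), reverse=True)
    return ranked[:k]

def build_rag_prompt(question, documents, k=2):
    """Augment the question with retrieved passages, each tagged with its id for citation."""
    context = "\n".join(f"[{d}] {documents[d]}" for d in retrieve(question, documents, k))
    return ("Answer using only the sources below and cite their ids. "
            "If they do not contain the answer, say so.\n\n"
            f"{context}\n\nQuestion: {question}")

docs = {  # hypothetical snippets standing in for an external database
    "hta-1": "The agency updated its guidance for cost effectiveness analyses.",
    "trial-7": "The phase 3 trial reported a hazard ratio for overall survival of 0.78.",
    "rwe-3": "Registry data describe treatment patterns in routine practice.",
}
print(build_rag_prompt("What hazard ratio for overall survival did the trial report?", docs, k=1))
```

The instruction to answer only from cited sources is what lets a reviewer trace each claim back to a retrieved passage.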

That said, RAG is not perfect. If the system pulls conflicting or low-quality information, the final answer can suffer. How well the system handles this kind of inconsistency is an active area of research.

Agent-based generative AI is a step beyond single-task LLMs. These systems are designed to carry out multi-step tasks independently by combining an LLM with tools like memory, logic chains, access to external databases, and the ability to interact with other agents.

Frameworks like LangChain and LangGraph make it easier to build such intelligent agents. For instance, LangGraph allows for stateful, multi-step reasoning across multiple agents, helping simulate more complex decision-making processes.

Another example is GPT Researcher, an AI agent that conducts in-depth online research and outputs structured reports with citations. In HEOR, agents can automate time-consuming workflows like evidence synthesis, economic modeling, or dossier preparation. These tools can reduce manual effort and improve consistency.

However, creating these agents is not plug-and-play. Developers need strong technical expertise, and there is still work to be done to make outputs fully transparent, reproducible, and trustworthy.
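
At its core, an agent is a loop. The model chooses a tool, the tool runs, the result is written to memory, and the loop repeats until the model returns a final answer or a step limit is reached. The sketch below shows that control flow in plain Python, with a scripted stand-in for the LLM and two hypothetical tools. It does not use or reproduce the LangChain or LangGraph APIs.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class AgentState:
    """Working memory carried from step to step."""
    task: str
    history: list = field(default_factory=list)  # (tool_name, tool_result) pairs
    answer: Optional[str] = None

def scripted_llm(state):
    """Stand-in for a model call: returns (tool_name, argument) or ("finish", answer)."""
    used = {tool for tool, _ in state.history}
    if "search" not in used:
        return "search", state.task
    if "extract" not in used:
        return "extract", state.history[-1][1]
    return "finish", f"Summary based on: {state.history[-1][1]}"

TOOLS = {  # hypothetical tools; real ones might query a literature database
    "search": lambda query: f"3 abstracts found for '{query}'",
    "extract": lambda text: f"PICO tables drafted from ({text})",
}

def run_agent(task, llm=scripted_llm, tools=TOOLS, max_steps=5):
    state = AgentState(task=task)
    for _ in range(max_steps):  # hard step limit guards against runaway loops
        action, arg = llm(state)
        if action == "finish":
            state.answer = arg
            break
        state.history.append((action, tools[action](arg)))  # record result in memory
    return state

result = run_agent("drug X type 2 diabetes RCTs")
for tool, output in result.history:
    print(tool, "->", output)
print(result.answer)
```

The step limit and the recorded history are the two pieces that matter most for transparency: together they bound what the agent can do and leave a trace of what it did.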

While generative AI has opened new possibilities for HEOR, it is important to stay grounded in its current limitations. From issues of scientific rigor and reproducibility to concerns around bias, privacy, and integration challenges, there are real factors that can affect how (and whether) these tools should be used. Let us break them down:

At the heart of any scientific method is the need for outputs to be both accurate and reproducible. This holds true for generative AI as well. Accuracy ensures that AI-generated results are factually correct, complete, and usable, while reproducibility allows others to verify those results under similar conditions.

Yet AI models, especially large language models, do not always meet these standards. They can "hallucinate" facts, including fabricating citations or distorting scientific details. In one real-world study, a model failed to accurately map free-text entries to clinical codes more than half the time. Another found major inconsistencies in disease progression modeling for hepatitis C.

To address this, techniques such as prompt refinement and RAG have helped reduce hallucinations and improve context alignment. Domain-specific models like GatorTron14 and Me LLaMA17, trained on clinical datasets, also show better performance on complex healthcare tasks. But reproducibility remains tricky, largely because of how opaque AI models can be: even small changes in input can lead to vastly different outputs.
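
One practical step toward reproducibility is an audit trail for every model call. The sketch below records the settings most likely to change an output (model identifier, sampling parameters, prompt template version) together with SHA-256 hashes of the exact prompt and response, so a reviewer can later confirm what was run. The field names and model identifier are illustrative assumptions; not every model API exposes a seed, and fixing one does not guarantee identical outputs.

```python
import hashlib
import json
from datetime import datetime, timezone

def sha256(text):
    """Hex digest of the UTF-8 encoded text."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()

def run_record(model, params, prompt, response, template_version):
    """Build an audit record for one generative AI call (field names are illustrative)."""
    return {
        "timestamp_utc": datetime.now(timezone.utc).isoformat(),
        "model": model,  # exact model name/version string reported by the provider
        "params": dict(sorted(params.items())),  # e.g. temperature, top_p, seed
        "template_version": template_version,
        "prompt_sha256": sha256(prompt),
        "response_sha256": sha256(response),
    }

record = run_record(
    model="example-model-2024-01",  # hypothetical identifier
    params={"temperature": 0.0, "seed": 1234},
    prompt="Extract PICO elements from the abstract below...",
    response='{"population": "adults with type 2 diabetes"}',
    template_version="pico-v3",  # hypothetical template label
)
print(json.dumps(record, indent=2))
```

Hashing rather than storing the full text keeps the log compact, while the full prompts and responses can be archived separately and verified against these digests.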

Making generative AI more reproducible will require a cultural shift toward open source sharing of models, datasets, and evaluation methods, along with better documentation and transparency. For HEOR, frameworks specifically designed to evaluate and report on generative AI performance are a step in the right direction.

One major hurdle is assessing the quality of AI-generated content. As these tools become more embedded in HEOR workflows, it is important to consider not only how effective they are, but also how fair. Bias in AI is not just a technical flaw; it can have real-world consequences, because AI models can pick up and amplify the biases present in their training data. In HEOR, where decisions may shape policy or determine patient access to treatment, fairness is not optional but essential.

For example, one recent study found that an AI model could accurately predict a patient’s self-reported race from medical images, something even human experts could not do. But the researchers had no idea what features the model was using to make that prediction, raising concerns about transparency and fairness.

If models are indirectly using race or socioeconomic indicators in their decision-making, they risk reinforcing disparities in treatment or outcomes. That is why fairness audits, bias detection, and mitigation strategies such as adversarial training, data balancing, and fine-tuning with diverse datasets are essential.
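
A basic fairness audit starts by comparing model behavior across subgroups. The sketch below computes, for each group, the rate of positive predictions and the true-positive rate, then reports the largest between-group gaps (the demographic parity difference and the equal opportunity difference). Group labels, outcomes, and predictions are hypothetical, and which metric matters most depends on the decision the model supports.

```python
import math
from collections import defaultdict

def group_rates(groups, y_true, y_pred):
    """Per-group positive prediction rate and true-positive rate for binary (0/1) labels."""
    stats = defaultdict(lambda: {"n": 0, "pred_pos": 0, "pos": 0, "tp": 0})
    for g, t, p in zip(groups, y_true, y_pred):
        s = stats[g]
        s["n"] += 1
        s["pred_pos"] += p
        s["pos"] += t
        s["tp"] += int(t == 1 and p == 1)
    return {
        g: {
            "positive_rate": s["pred_pos"] / s["n"],
            "tpr": s["tp"] / s["pos"] if s["pos"] else float("nan"),
        }
        for g, s in stats.items()
    }

def max_gap(rates, metric):
    """Largest between-group difference in a metric (0 means parity); skips undefined values."""
    values = [r[metric] for r in rates.values() if not math.isnan(r[metric])]
    return max(values) - min(values)

groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
rates = group_rates(groups, y_true, y_pred)
print(rates)
print("Demographic parity difference:", max_gap(rates, "positive_rate"))
print("Equal opportunity difference:", max_gap(rates, "tpr"))
```

In this toy example, group B's eligible patients are flagged half as often as group A's, the kind of gap a mitigation strategy would then aim to close.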

Synthetic data, which can be designed to correct imbalances in real-world data, is also being explored as a solution. Research into bias in generative models applied to HEOR is still at an early stage but growing fast, and it is likely to become a core focus soon.

Deploying generative AI in HEOR is not just about getting the technology right; it is about fitting that technology into complex systems governed by strict rules and high expectations.

Federated analytics, in which models analyze data across sources without centralizing it, offers a promising path forward, preserving privacy while still enabling meaningful analysis.
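
The core idea can be shown with a pooled summary statistic. Each site computes local aggregates (count, mean, and sum of squared deviations), only those aggregates leave the site, and a coordinator combines them into the overall mean and variance without ever seeing patient-level rows. The site values below are hypothetical, and real deployments often add safeguards such as minimum cell sizes or added noise, because even aggregates can leak information.

```python
from typing import NamedTuple

class SiteSummary(NamedTuple):
    n: int
    mean: float
    m2: float  # sum of squared deviations from the site mean

def summarize(values):
    """Runs locally at each site: only these three numbers are shared."""
    n = len(values)
    mean = sum(values) / n
    return SiteSummary(n, mean, sum((v - mean) ** 2 for v in values))

def combine(summaries):
    """Runs at the coordinator: exact pooled mean and sample variance from site aggregates."""
    n = sum(s.n for s in summaries)
    mean = sum(s.n * s.mean for s in summaries) / n
    # Within-site spread plus the spread of site means around the pooled mean
    m2 = sum(s.m2 + s.n * (s.mean - mean) ** 2 for s in summaries)
    return mean, m2 / (n - 1)

# Hypothetical patient ages held at three sites
site_a = [62.0, 70.0, 58.0]
site_b = [75.0, 66.0]
site_c = [80.0, 59.0, 71.0, 64.0]
mean, var = combine([summarize(s) for s in (site_a, site_b, site_c)])
print(f"Pooled mean age {mean:.1f}, variance {var:.1f}")
```

The pooled result matches what a centralized analysis would give, which is what makes this pattern attractive when data cannot leave the source institution.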

Organizations must weigh control against convenience, and that choice will shape how AI is adopted in HEOR.

To see more widespread adoption, the industry will need simpler interfaces and off-the-shelf tools tailored to specific HEOR functions. Training and upskilling HEOR professionals to work with these systems will be a key part of that journey.

Generative AI is reshaping what is possible in HEOR, from speeding up literature reviews and automating parts of economic modeling to delivering more dynamic and interactive insights. The potential is clear, but so is the need for careful, thoughtful implementation.

There is still a lot of work to be done to ensure these tools are accurate, fair, reproducible, and operationally feasible. Techniques like prompt engineering, RAG, fine-tuning, and domain-specific modeling all help, but adoption must be matched with careful planning, collaboration, and continuous learning.

For HEOR professionals, embracing generative AI means stepping into a more dynamic, tech-enabled future, one where careful stewardship can lead to smarter decisions, better evidence, and ultimately, better health outcomes.